Building on our previous posts, this post shows how the Face API's person and person group functionality works, and how to persist faces to Microsoft Azure.

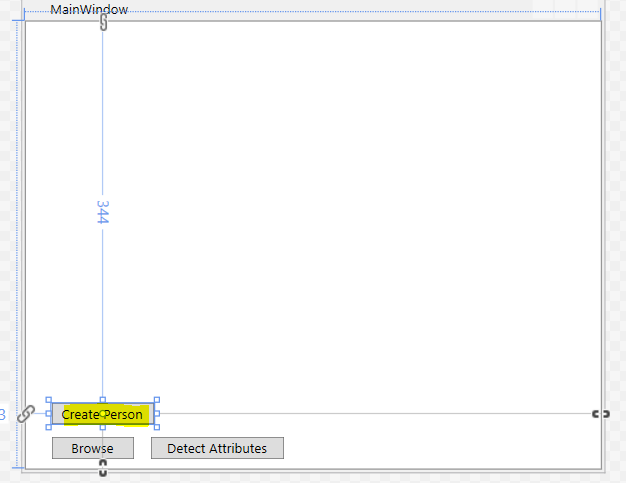

In our WPF application, add a new button called Create Person.

On pressing this button, we will do the following:

- We will create a new Person Group, called “My People Group”, using PersonGroup.CreateAsync. We supply the group's ID ourselves, using a newly generated GUID

- We will create a new Person, called “Bob Smith”, using PersonGroupPerson.CreateAsync. We will attach this person to our group

- Once Bob Smith is attached to our group, we will attach a face to Bob Smith using PersonGroupPerson.AddFaceFromStreamAsync. This will be persisted in Azure storage

- We will then loop through all groups using PersonGroup.ListAsync, and view all people in each group through PersonGroupPerson.ListAsync

- Finally, we will delete each group using PersonGroup.DeleteAsync
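The group ID in the first step is chosen by us rather than by the service, which is why the listing generates a GUID for it. As far as we understand the Face API documentation, a person group ID may only contain lowercase letters, digits, '-' and '_', and may be at most 64 characters long. The sketch below checks those assumed rules before anything is sent to Azure. `PersonGroupIds`, `NewId` and `IsValid` are hypothetical helper names for illustration; they are not part of the SDK.

```csharp
using System;
using System.Linq;

// Hypothetical helper for illustration only; not part of the Face SDK.
public static class PersonGroupIds
{
    // Assumption: maximum ID length, taken from the Face API documentation.
    public const int MaxLength = 64;

    // Guid.ToString() uses the "D" format: lowercase hex digits separated by hyphens.
    public static string NewId() => Guid.NewGuid().ToString();

    // Returns true when the ID uses only lowercase letters, digits, '-' or '_'
    // and is between 1 and MaxLength characters long.
    public static bool IsValid(string id) =>
        !string.IsNullOrEmpty(id)
        && id.Length <= MaxLength
        && id.All(c => (c >= 'a' && c <= 'z')
                    || (c >= '0' && c <= '9')
                    || c == '-'
                    || c == '_');
}
```

A display name such as “My People Group” fails this check because of its capitals and spaces, which is why the name and the ID are passed to PersonGroup.CreateAsync as two separate values.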

Below is the full source, carrying on from our previous code.

using Microsoft.Azure.CognitiveServices.Vision.Face;

using Microsoft.Azure.CognitiveServices.Vision.Face.Models;

using System;

using System.Collections.Generic;

using System.IO;

using System.Linq;

using System.Text;

using System.Threading.Tasks;

using System.Windows;

using System.Windows.Controls;

using System.Windows.Data;

using System.Windows.Documents;

using System.Windows.Input;

using System.Windows.Media;

using System.Windows.Media.Imaging;

using System.Windows.Navigation;

using System.Windows.Shapes;

namespace Carl.FaceAPI

{

/// <summary>

/// Interaction logic for MainWindow.xaml

/// </summary>

public partial class MainWindow : Window

{

private const string subscriptionKey = "yourkey";

private const string baseUri =

"https://yourregion.api.cognitive.microsoft.com/";

private readonly IFaceClient faceClient = new FaceClient(

new ApiKeyServiceClientCredentials(subscriptionKey),

new System.Net.Http.DelegatingHandler[] { });

IList<DetectedFace> faceList;

String[] faceDescriptions;

double resizeFactor;

public MainWindow()

{

InitializeComponent();

faceClient.Endpoint = baseUri;

}

private async void btnBrowse_Click(object sender, RoutedEventArgs e)

{

var dialog = new Microsoft.Win32.OpenFileDialog();

dialog.Filter = "JPG (*.jpg)|*.jpg";

bool? result = dialog.ShowDialog(this);

if (!(bool)result)

{

return;

}

string filePath = dialog.FileName;

Uri uriSource = new Uri(filePath);

BitmapImage source = new BitmapImage();

source.BeginInit();

source.CacheOption = BitmapCacheOption.None;

source.UriSource = uriSource;

source.EndInit();

Image1.Source = source;

faceList = await UploadAndDetectFaces(filePath);

if (faceList.Count > 0)

{

DrawingVisual visual = new DrawingVisual();

DrawingContext context = visual.RenderOpen();

context.DrawImage(source, new Rect(0, 0, source.Width, source.Height));

double dpi = source.DpiX;

resizeFactor = (dpi > 0) ? 96 / dpi : 1;

faceDescriptions = new String[faceList.Count];

for (int i = 0; i < faceList.Count; ++i)

{

DetectedFace face = faceList[i];

context.DrawRectangle(

Brushes.Transparent,

new Pen(Brushes.Green, 5),

new Rect(

face.FaceRectangle.Left * resizeFactor,

face.FaceRectangle.Top * resizeFactor,

face.FaceRectangle.Width * resizeFactor,

face.FaceRectangle.Height * resizeFactor

)

);

}

context.Close();

RenderTargetBitmap facewithRectangle = new RenderTargetBitmap(

(int)(source.PixelWidth * resizeFactor),

(int)(source.PixelHeight * resizeFactor),

96,

96,

PixelFormats.Default);

facewithRectangle.Render(visual);

Image1.Source = facewithRectangle;

}

}

private async Task<IList<DetectedFace>> UploadAndDetectFaces(string imageFilePath)

{

try

{

IList<FaceAttributeType> faceAttributes =

new FaceAttributeType[]

{

FaceAttributeType.Age,

FaceAttributeType.Blur,

FaceAttributeType.Emotion,

FaceAttributeType.FacialHair,

FaceAttributeType.Gender,

FaceAttributeType.Glasses,

FaceAttributeType.Hair,

FaceAttributeType.HeadPose,

FaceAttributeType.Makeup,

FaceAttributeType.Noise,

FaceAttributeType.Occlusion,

FaceAttributeType.Smile

};

using (Stream imageFileStream = File.OpenRead(imageFilePath))

{

IList<DetectedFace> faceList =

await faceClient.Face.DetectWithStreamAsync(

imageFileStream, true, false, faceAttributes);

return faceList;

}

}

catch (APIErrorException f)

{

MessageBox.Show(f.Message);

return new List<DetectedFace>();

}

catch (Exception e)

{

MessageBox.Show(e.Message, "Error");

return new List<DetectedFace>();

}

}

private void btnDetectAttributes_Click(object sender, RoutedEventArgs e)

{

ImageSource imageSource = Image1.Source;

BitmapSource bitmapSource = (BitmapSource)imageSource;

var scale = Image1.ActualWidth / (bitmapSource.PixelWidth / resizeFactor);

for (int i = 0; i < faceList.Count; ++i)

{

FaceRectangle fr = faceList[i].FaceRectangle;

double left = fr.Left * scale;

double top = fr.Top * scale;

double width = fr.Width * scale;

double height = fr.Height * scale;

faceDescriptions[i] = FaceDescription(faceList[i]);

TextBlock1.Text = TextBlock1.Text + string.Format("Person {0}: {1} \n", i.ToString(), faceDescriptions[i]);

}

}

private string FaceDescription(DetectedFace face)

{

StringBuilder sb = new StringBuilder();

sb.Append(face.FaceAttributes.Gender);

sb.Append(", ");

sb.Append(face.FaceAttributes.Age);

sb.Append(", ");

sb.Append(String.Format("smile {0:F1}%, ", face.FaceAttributes.Smile * 100));

sb.Append("Emotion: ");

Emotion emotionScores = face.FaceAttributes.Emotion;

if (emotionScores.Anger >= 0.1f)

sb.Append(String.Format("anger {0:F1}%, ", emotionScores.Anger * 100));

if (emotionScores.Contempt >= 0.1f)

sb.Append(String.Format("contempt {0:F1}%, ", emotionScores.Contempt * 100));

if (emotionScores.Disgust >= 0.1f)

sb.Append(String.Format("disgust {0:F1}%, ", emotionScores.Disgust * 100));

if (emotionScores.Fear >= 0.1f)

sb.Append(String.Format("fear {0:F1}%, ", emotionScores.Fear * 100));

if (emotionScores.Happiness >= 0.1f)

sb.Append(String.Format("happiness {0:F1}%, ", emotionScores.Happiness * 100));

if (emotionScores.Neutral >= 0.1f)

sb.Append(String.Format("neutral {0:F1}%, ", emotionScores.Neutral * 100));

if (emotionScores.Sadness >= 0.1f)

sb.Append(String.Format("sadness {0:F1}%, ", emotionScores.Sadness * 100));

if (emotionScores.Surprise >= 0.1f)

sb.Append(String.Format("surprise {0:F1}%, ", emotionScores.Surprise * 100));

sb.Append(face.FaceAttributes.Glasses);

sb.Append(", ");

sb.Append("Hair: ");

if (face.FaceAttributes.Hair.Bald >= 0.01f)

sb.Append(String.Format("bald {0:F1}% ", face.FaceAttributes.Hair.Bald * 100));

IList<HairColor> hairColors = face.FaceAttributes.Hair.HairColor;

foreach (HairColor hairColor in hairColors)

{

if (hairColor.Confidence >= 0.1f)

{

sb.Append(hairColor.Color.ToString());

sb.Append(String.Format(" {0:F1}% ", hairColor.Confidence * 100));

}

}

// Separate each attribute with ", " so the description does not run together

sb.Append(string.Format("Blur: {0}, ", face.FaceAttributes.Blur.BlurLevel));

sb.Append(string.Format("Facial Hair: {0}, ", face.FaceAttributes.FacialHair.Beard));

sb.Append(string.Format("Head Pose: {0}, ", face.FaceAttributes.HeadPose.Roll));

sb.Append(string.Format("Makeup: {0}, ", face.FaceAttributes.Makeup.LipMakeup));

sb.Append(string.Format("Noise: {0}, ", face.FaceAttributes.Noise.NoiseLevel));

sb.Append(string.Format("Occlusion: {0}", face.FaceAttributes.Occlusion.ForeheadOccluded));

return sb.ToString();

}

private async void btnCreatePerson_Click(object sender, RoutedEventArgs e)

{

var dialog = new Microsoft.Win32.OpenFileDialog();

dialog.Filter = "JPG (*.jpg)|*.jpg";

bool? result = dialog.ShowDialog(this);

if (!(bool)result)

{

return;

}

string filePath = dialog.FileName;

Uri uriSource = new Uri(filePath);

BitmapImage source = new BitmapImage();

source.BeginInit();

source.CacheOption = BitmapCacheOption.None;

source.UriSource = uriSource;

source.EndInit();

Image1.Source = source;

// Await the call so the handler does not return before the work completes

await CreatePersonandGroup(filePath);

}

private async Task CreatePersonandGroup(string imageFilePath)

{

try

{

// Create Person group

string persongroupName = "My People Group";

string persongroupPersonGroupId = Guid.NewGuid().ToString();

await faceClient.PersonGroup.CreateAsync(persongroupPersonGroupId, persongroupName);

// Create person

string personName = "Bob Smith";

// The service generates the person ID and returns it on the created Person

Person p = await faceClient.PersonGroupPerson.CreateAsync(persongroupPersonGroupId, personName);

using (Stream imageFileStream = File.OpenRead(imageFilePath))

{

await faceClient.PersonGroupPerson.AddFaceFromStreamAsync(

persongroupPersonGroupId, p.PersonId, imageFileStream);

}

// Get Groups and Person

IList<PersonGroup> persongroupList = await faceClient.PersonGroup.ListAsync();

foreach (PersonGroup pg in persongroupList)

{

IList<Person> people = await faceClient.PersonGroupPerson.ListAsync(pg.PersonGroupId);

foreach (Person person in people)

{

// Do something with each person

}

// Delete person group (note: this loop removes every group returned by ListAsync, not only the one created above)

await faceClient.PersonGroup.DeleteAsync(pg.PersonGroupId);

}

}

catch (APIErrorException f)

{

MessageBox.Show(f.Message);

}

catch (Exception e)

{

MessageBox.Show(e.Message, "Error");

}

}

}

}
