In this post, we will go through an example of using the Face recognition functionality of Azure Cognitive Services. The app will be built in C#. It takes a photo, uploads it to Azure Cognitive Services, detects the faces in the image using DetectWithStreamAsync, and finally draws a rectangle around each face.

Let’s build the app.

First, you will need to sign up for an Azure Cognitive Services API key for the Face API.

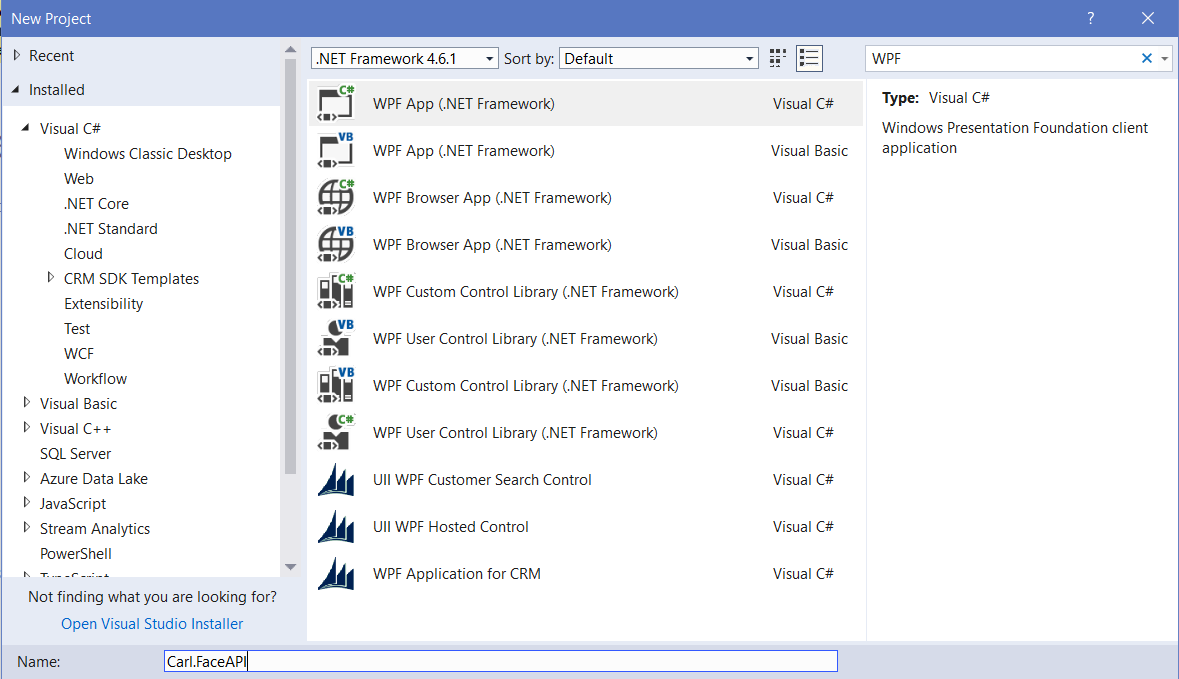
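The key and endpoint you get here are pasted into the code later as the `subscriptionKey` and `baseUri` constants. The endpoint has to be a full absolute URI. If it is not (for example, if the `yoururl` placeholder is left in place), the request fails when the SDK builds the request URL. This is one plausible cause of the "Invalid URI" error a reader reports in the comments, though that is an assumption. The sketch below uses a hypothetical helper, `EndpointCheck.IsValidEndpoint`, which is not part of the Face SDK. It checks the string with `Uri.TryCreate` before you assign it to `faceClient.Endpoint`.

```csharp
using System;

// Hypothetical helper (not part of the Face SDK): checks that the endpoint
// copied from the Azure portal is an absolute http(s) URI before it is
// assigned to FaceClient.Endpoint.
public static class EndpointCheck
{
    public static bool IsValidEndpoint(string endpoint)
    {
        // Uri.TryCreate returns false instead of throwing UriFormatException
        // for strings without a scheme, such as the "yoururl" placeholder.
        return Uri.TryCreate(endpoint, UriKind.Absolute, out Uri uri)
            && (uri.Scheme == Uri.UriSchemeHttps || uri.Scheme == Uri.UriSchemeHttp);
    }
}
```

For example, `IsValidEndpoint("https://northeurope.api.cognitive.microsoft.com/")` is true, and `IsValidEndpoint("yoururl")` is false.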

Now in Visual Studio, create a new WPF app:

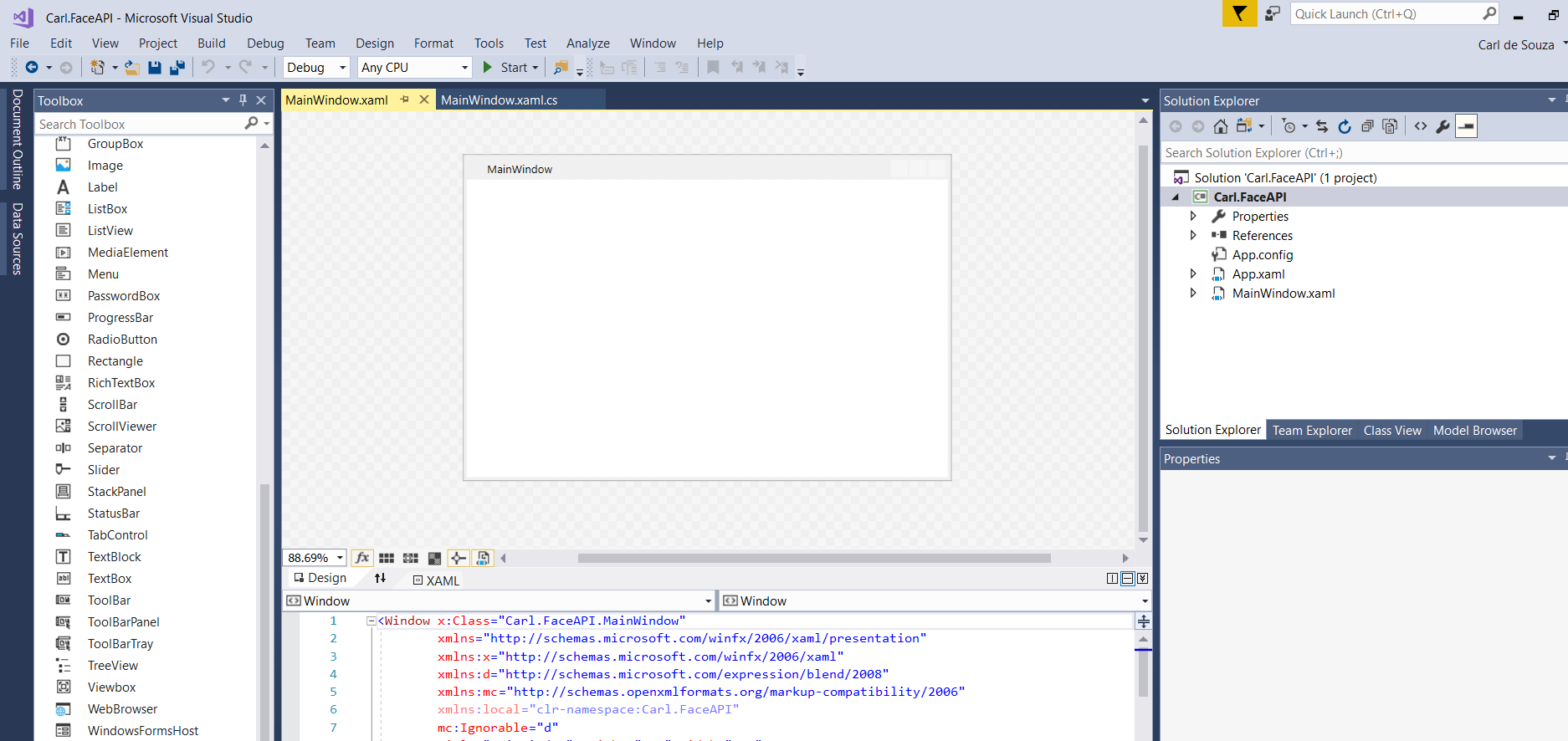

You will see:

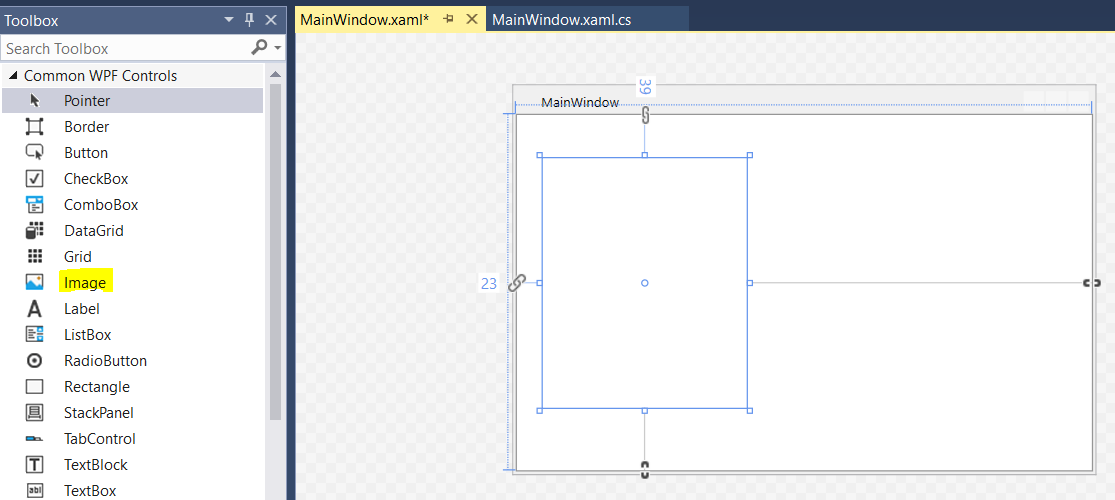

Drag an Image control onto the window, name it Image1, and resize it accordingly:

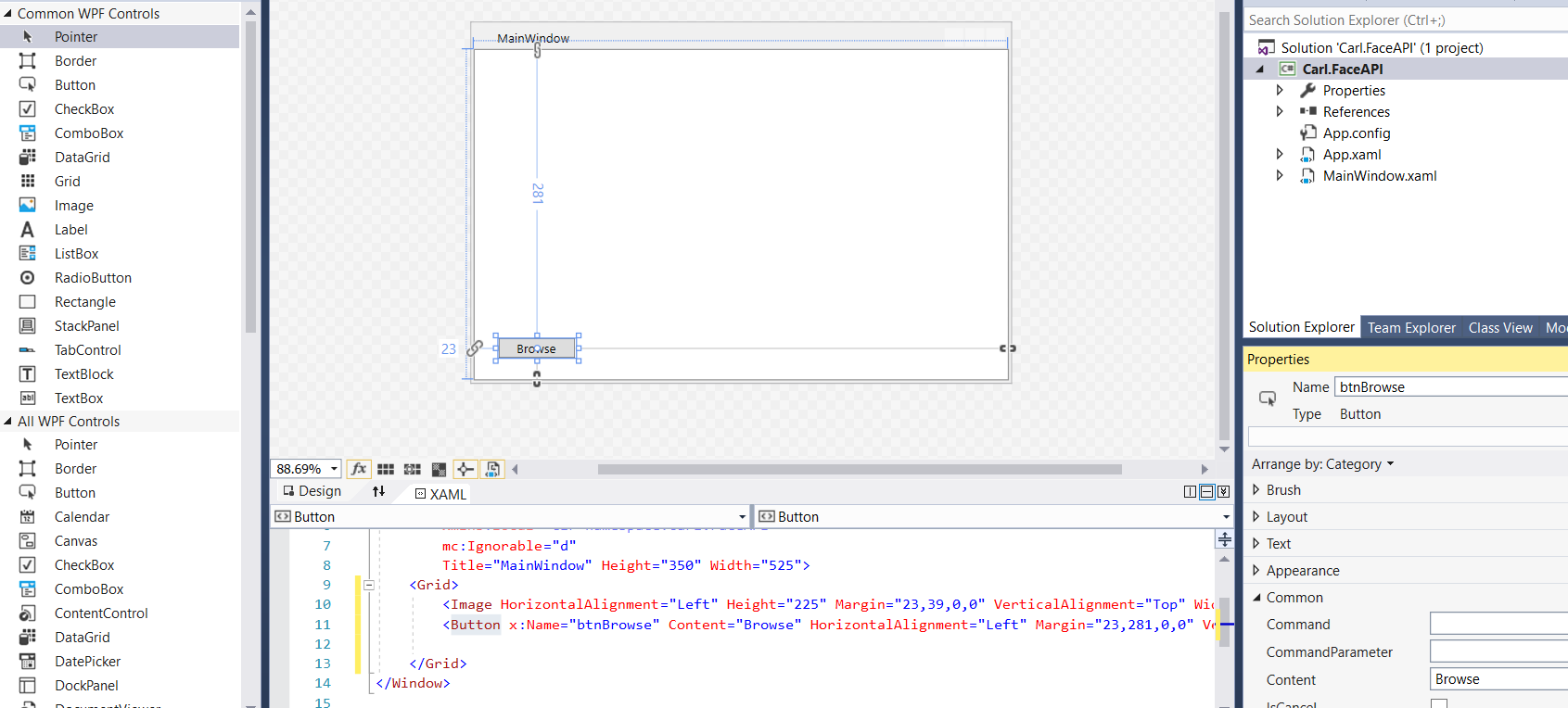

Add a Browse button named btnBrowse:

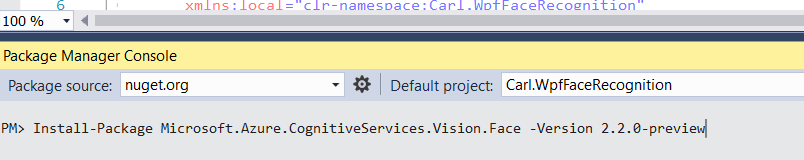

Open the NuGet Package Manager Console and install the package:

```
Install-Package Microsoft.Azure.CognitiveServices.Vision.Face -Version 2.2.0-preview
```

Now, double-click the Browse button to generate its Click handler, and change the method to async:

We will be using the following namespaces:

- Microsoft.Azure.CognitiveServices.Vision.Face

- Microsoft.Azure.CognitiveServices.Vision.Face.Models

Add the following code to MainWindow.xaml.cs, filling in your subscription key and endpoint (base URI) from above. The code uses the Face API's DetectWithStreamAsync method to detect the faces in a photo:

```csharp
using Microsoft.Azure.CognitiveServices.Vision.Face;
using Microsoft.Azure.CognitiveServices.Vision.Face.Models;
using System;
using System.Collections.Generic;
using System.IO;
using System.Threading.Tasks;
using System.Windows;
using System.Windows.Media;
using System.Windows.Media.Imaging;

namespace Carl.FaceAPI
{
    /// <summary>
    /// Interaction logic for MainWindow.xaml
    /// </summary>
    public partial class MainWindow : Window
    {
        // Key and endpoint of your Face resource from the Azure portal.
        private const string subscriptionKey = "yourapikey";
        private const string baseUri =
            "yoururl"; // E.g. https://northeurope.api.cognitive.microsoft.com/

        private readonly IFaceClient faceClient = new FaceClient(
            new ApiKeyServiceClientCredentials(subscriptionKey),
            new System.Net.Http.DelegatingHandler[] { });

        IList<DetectedFace> faceList;
        String[] faceDescriptions;
        double resizeFactor;

        public MainWindow()
        {
            InitializeComponent();
            // Must be a full URI including https://, not the "yoururl" placeholder.
            faceClient.Endpoint = baseUri;
        }

        private async void btnBrowse_Click(object sender, RoutedEventArgs e)
        {
            // Let the user pick a JPG file; stop if the dialog is cancelled.
            var dialog = new Microsoft.Win32.OpenFileDialog();
            dialog.Filter = "JPG (*.jpg)|*.jpg";
            bool? result = dialog.ShowDialog(this);
            if (result != true)
            {
                return;
            }

            // Show the selected image in the Image1 control.
            string filePath = dialog.FileName;
            Uri uriSource = new Uri(filePath);
            BitmapImage source = new BitmapImage();
            source.BeginInit();
            source.CacheOption = BitmapCacheOption.None;
            source.UriSource = uriSource;
            source.EndInit();
            Image1.Source = source;

            // Upload the image and get the detected faces back.
            faceList = await UploadAndDetectFaces(filePath);

            if (faceList.Count > 0)
            {
                // Redraw the image, then a green rectangle over each face.
                DrawingVisual visual = new DrawingVisual();
                DrawingContext context = visual.RenderOpen();
                context.DrawImage(source, new Rect(0, 0, source.Width, source.Height));

                // FaceRectangle values are in pixels, while WPF draws in
                // 1/96-inch units, so scale them by 96 / DPI.
                double dpi = source.DpiX;
                resizeFactor = (dpi > 0) ? 96 / dpi : 1;
                faceDescriptions = new String[faceList.Count];

                for (int i = 0; i < faceList.Count; ++i)
                {
                    DetectedFace face = faceList[i];
                    context.DrawRectangle(
                        Brushes.Transparent,
                        new Pen(Brushes.Green, 5),
                        new Rect(
                            face.FaceRectangle.Left * resizeFactor,
                            face.FaceRectangle.Top * resizeFactor,
                            face.FaceRectangle.Width * resizeFactor,
                            face.FaceRectangle.Height * resizeFactor));
                }

                context.Close();

                // Render the visual to a bitmap and show it in place of the original.
                RenderTargetBitmap facewithRectangle = new RenderTargetBitmap(
                    (int)(source.PixelWidth * resizeFactor),
                    (int)(source.PixelHeight * resizeFactor),
                    96,
                    96,
                    PixelFormats.Default);
                facewithRectangle.Render(visual);
                Image1.Source = facewithRectangle;
            }
        }

        // Uploads the image file to the Face API and returns the detected faces
        // (an empty list if the call fails).
        private async Task<IList<DetectedFace>> UploadAndDetectFaces(string imageFilePath)
        {
            try
            {
                using (Stream imageFileStream = File.OpenRead(imageFilePath))
                {
                    // Arguments: returnFaceId = true, returnFaceLandmarks = false,
                    // returnFaceAttributes = null (no attributes requested).
                    IList<DetectedFace> faces =
                        await faceClient.Face.DetectWithStreamAsync(
                            imageFileStream, true, false, null);
                    return faces;
                }
            }
            catch (APIErrorException f)
            {
                MessageBox.Show(f.Message);
                return new List<DetectedFace>();
            }
            catch (Exception e)
            {
                MessageBox.Show(e.Message, "Error");
                return new List<DetectedFace>();
            }
        }
    }
}
```
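The `resizeFactor` step in `btnBrowse_Click` is easy to get wrong, so here it is isolated. The Face API returns `FaceRectangle` values in image pixels. WPF, however, lays out and draws in device-independent units of 1/96 inch. For an image saved at a DPI other than 96, the pixel values therefore need scaling by `96 / DpiX` before they line up with the drawn image. The helper below is a hypothetical, minimal sketch that mirrors that arithmetic. It uses plain integers instead of the SDK's `FaceRectangle` type so that it runs without the Face package.

```csharp
using System;

// Hypothetical helper mirroring the resizeFactor logic in btnBrowse_Click.
// Face rectangles arrive in pixels; WPF draws in 1/96-inch units.
public static class FaceRectScaling
{
    // Same rule as the post: 96 / DPI, falling back to 1 when DPI is unknown.
    public static double ResizeFactor(double dpiX) => dpiX > 0 ? 96.0 / dpiX : 1.0;

    // Converts a pixel rectangle (left, top, width, height) to WPF units.
    public static (double Left, double Top, double Width, double Height) ToDips(
        int left, int top, int width, int height, double dpiX)
    {
        double f = ResizeFactor(dpiX);
        return (left * f, top * f, width * f, height * f);
    }
}
```

For a 192 DPI photo, the factor is 0.5, so a face at pixel (100, 40) with size 60×80 is drawn at (50, 20) with size 30×40. For a 96 DPI photo, the values pass through unchanged.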

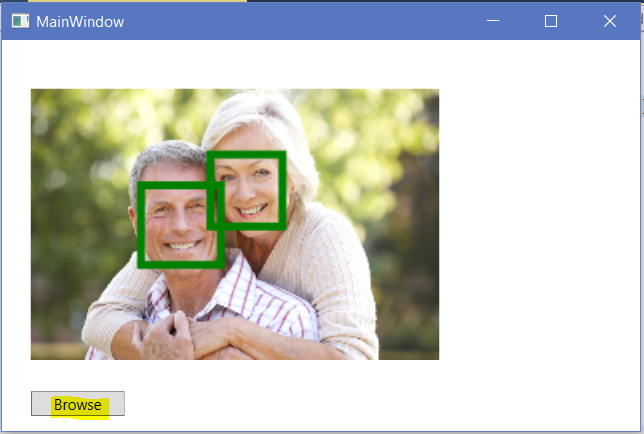

Run the code and press Browse. You will see that the API has detected two faces:

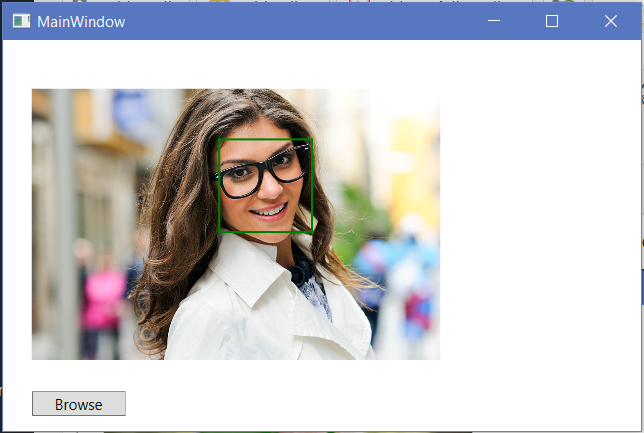

Try again with a photo of one person:

I AM SPENDING MORE TIME THESE DAYS CREATING YOUTUBE VIDEOS TO HELP PEOPLE LEARN THE MICROSOFT POWER PLATFORM.

IF YOU WOULD LIKE TO SEE HOW I BUILD APPS, OR FIND SOMETHING USEFUL READING MY BLOG, I WOULD REALLY APPRECIATE YOU SUBSCRIBING TO MY YOUTUBE CHANNEL.

THANK YOU, AND LET'S KEEP LEARNING TOGETHER.

CARL

Please,

the Microsoft.Azure.CognitiveServices.Vision.Face package is no longer available for installation from NuGet.

How to proceed?

Hey Jose,

You need to install Microsoft.Azure.CognitiveServices.Vision.Face; it's available. Try it!

Best,

Feroz.

NuGet command line:

Install-Package Microsoft.Azure.CognitiveServices.Vision.Face -Version 2.2.0-preview

Hello. I have implemented the code in my WPF project; however, when I run it and upload an image, I get the exception "Invalid URI: The format of the URI could not be determined."

Thanks a lot, it works pretty fine…

Mike